Raw Images: Frequently Asked Questions

Why does NASA turn on the cameras before spacecraft get to their destinations?

While a spacecraft is traveling to its destination (a period referred to as "cruise"), the scientists and engineers on the spacecraft's imaging team need to make sure the camera (or cameras, if there is more than one) is still working properly after the shaking and rattling of launch and exposure to the extreme environment of space. They also need to determine whether the spacecraft can properly point its cameras at specific targets. Cruise is also a good time to test software designed to calibrate the cameras once they are in space. For these reasons, imaging teams take pictures of specific stars and planets with known positions, colors, and brightnesses to make sure that photos taken by the cameras are well calibrated and can confidently be used for scientific study.
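The idea of calibrating against a star of known brightness can be sketched in a few lines. This is a simplified illustration, not any mission's pipeline: it assumes the camera's response is linear, that one reference star of known brightness (in arbitrary flux units) was imaged through the same filter as the target, and the function names and numbers are hypothetical. Real calibrations combine many stars and account for filter bandpasses and detector effects.

```python
# Hypothetical sketch of calibrating against a reference star.
# Assumes a linear camera response; all numbers are invented, arbitrary units.

def calibration_factor(known_flux, measured_counts, exposure_s):
    """Flux per (count per second), derived from one reference star."""
    count_rate = measured_counts / exposure_s
    return known_flux / count_rate


def to_flux(measured_counts, exposure_s, factor):
    """Convert another object's measured signal into flux units."""
    return factor * measured_counts / exposure_s


# Reference star: catalog brightness 500, recorded 20,000 counts in 2 s.
factor = calibration_factor(500.0, 20000.0, 2.0)
# Another object imaged through the same filter: 8,000 counts in 1 s.
print(to_flux(8000.0, 1.0, factor))
```

Using a count rate (counts per second) rather than raw counts lets images with different exposure durations share one calibration factor.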

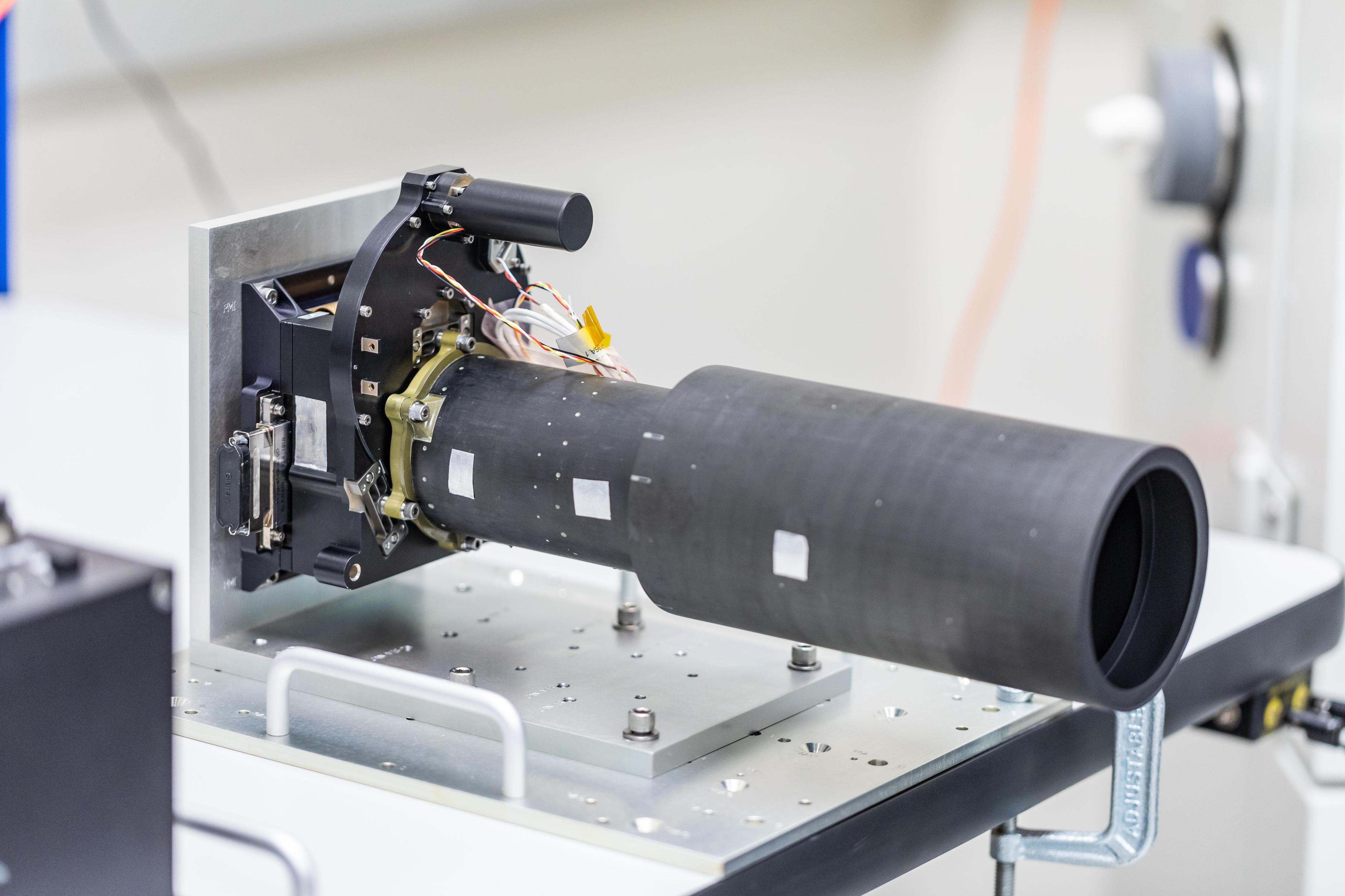

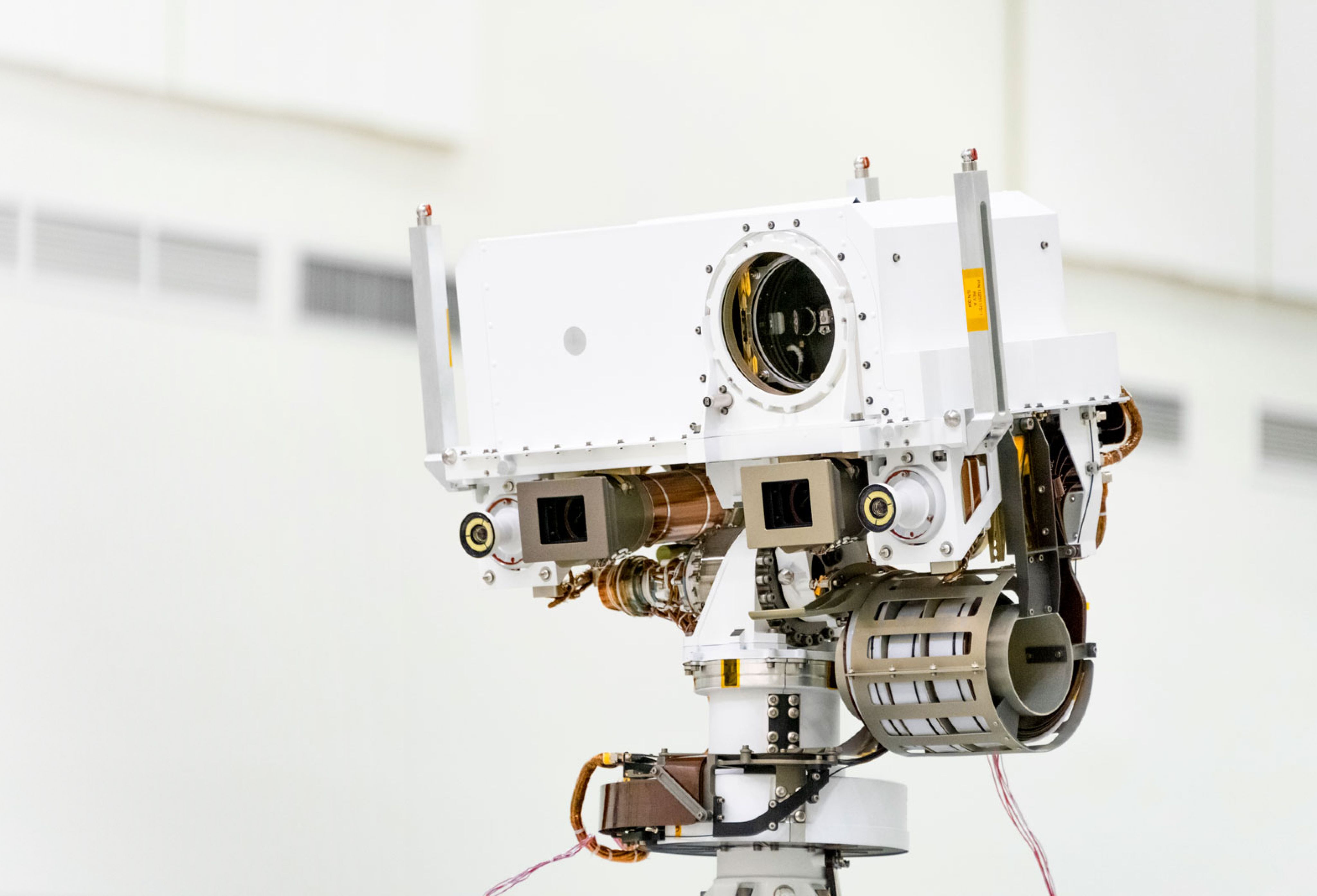

Spacecraft Cameras

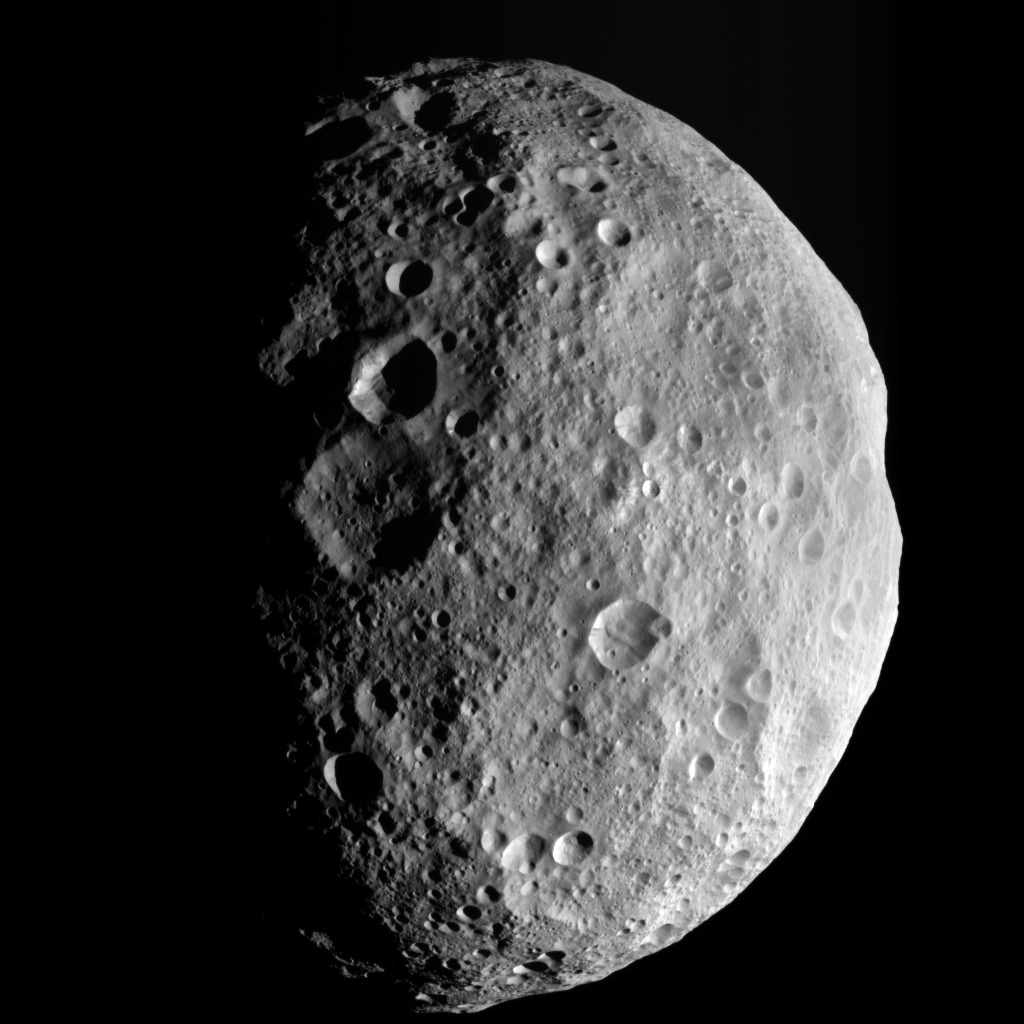

What are we seeing when the cameras are turned on before spacecraft arrive at their destinations? What are the white dots and white lines seen in these images?

During the cruise phase of a NASA solar system exploration mission, a spacecraft's cameras are generally just taking test images of deep space. Many of the white dots in such images are stars, some bright and many dim. We know which stars they are because we know the part of the sky the camera was pointed at when each image was taken, and we can look up those stars in standard star catalogs.
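Predicting where catalog stars should appear can be sketched with the standard gnomonic (tangent-plane) projection, which maps a star's right ascension and declination onto a flat image around the pointing direction. This is a minimal sketch under stated assumptions: an ideal camera with no roll and no lens distortion, square pixels of a fixed angular size, and the pointing direction at pixel (cx, cy). The function names, matching tolerance, and example coordinates are hypothetical. Dots that match no predicted star are candidates for the other sources of white dots.

```python
import math

# Minimal sketch. Assumptions: ideal pinhole camera, no roll or distortion,
# square pixels of angular size pixel_scale_deg, boresight at pixel (cx, cy).
# Axis orientation (which way RA and Dec increase on the image) is assumed.

def project_star(ra_deg, dec_deg, ra0_deg, dec0_deg, pixel_scale_deg, cx, cy):
    """Gnomonic projection of a catalog star into pixel coordinates."""
    ra, dec = math.radians(ra_deg), math.radians(dec_deg)
    ra0, dec0 = math.radians(ra0_deg), math.radians(dec0_deg)
    cos_c = (math.sin(dec0) * math.sin(dec)
             + math.cos(dec0) * math.cos(dec) * math.cos(ra - ra0))
    if cos_c <= 0:
        return None  # star is 90 degrees or more from the pointing
    xi = math.cos(dec) * math.sin(ra - ra0) / cos_c
    eta = (math.cos(dec0) * math.sin(dec)
           - math.sin(dec0) * math.cos(dec) * math.cos(ra - ra0)) / cos_c
    scale = math.radians(pixel_scale_deg)  # radians per pixel near center
    return cx + xi / scale, cy + eta / scale


def match_dots(dots, predicted, tolerance_px=2.0):
    """Split detected dots into catalog matches and unexplained dots."""
    matched, unexplained = [], []
    for dot in dots:
        if any(math.dist(dot, p) <= tolerance_px for p in predicted):
            matched.append(dot)
        else:
            unexplained.append(dot)
    return matched, unexplained


# Invented example: pointing at RA 10 deg, Dec 20 deg, 0.01 deg per pixel.
catalog = [(10.0, 20.0), (10.05, 20.02)]
predicted = [project_star(ra, dec, 10.0, 20.0, 0.01, 512, 512)
             for ra, dec in catalog]
predicted = [p for p in predicted if p is not None]

dots = [(512.3, 511.8), (100.0, 900.0)]
matched, unexplained = match_dots(dots, predicted)
print(unexplained)  # [(100.0, 900.0)]
```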

Some of the white dots appear where there are no stars, and they have several sources. Many come from cosmic rays: high-energy particles, emitted by the Sun or by distant objects such as supernovas or quasars, that randomly strike the camera's sensor and light up one or more pixels. When a cosmic ray hits a pixel head-on, it lights up just that pixel. But if it strikes the sensor at an angle, it can light up a line of pixels, creating a streak in the image. The longest streaks come from cosmic rays that hit the sensor at grazing angles.
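The link between impact angle and streak length is simple geometry: a particle crossing a sensitive layer of thickness d at an angle θ from the sensor's normal travels roughly d·tan θ sideways, so the streak spans about 1 + ⌊d·tan θ / p⌋ pixels of width p. The sketch below assumes charge is collected along the whole path and ignores charge spreading into neighboring pixels; the layer thickness, pixel pitch, and sensor width defaults are illustrative values, not any specific camera's specifications.

```python
import math

# Hypothetical sketch: approximate streak length versus impact angle.
# depth_um, pitch_um, and sensor_width_px are illustrative assumptions.

def streak_length_px(angle_from_normal_deg, depth_um=15.0, pitch_um=12.0,
                     sensor_width_px=1024):
    """Approximate number of pixels lit by a cosmic ray crossing the sensor."""
    if angle_from_normal_deg >= 90:
        return sensor_width_px  # skimming along the surface
    sideways_um = depth_um * math.tan(math.radians(angle_from_normal_deg))
    # A head-on hit (angle 0) lights one pixel; each extra pitch adds one.
    return min(sensor_width_px, 1 + math.floor(sideways_um / pitch_um))


for angle in (0, 45, 80, 89, 89.9):
    print(angle, streak_length_px(angle))
```

Because tan θ grows without bound as θ approaches 90°, grazing hits produce streaks far longer than steeper ones, matching the behavior described above.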

Other non-star white dots are what we call "hot pixels": a small number of pixels, out of the many in each camera sensor, that for one reason or another record a much higher signal than average pixels. They show high signal levels (appearing as white in the raw images) even where there are no stars. Each sensor contains a great many pixels: NASA's Psyche spacecraft cameras, for example, have nearly 2 million pixels in each camera sensor, while the Cassini imaging cameras had over 1 million.
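One common way to tell hot pixels from cosmic-ray hits is to compare several frames: a hot pixel is bright at the same detector position in every frame, while a cosmic ray usually lights a given pixel in only one. This minimal sketch assumes the frames are lists of rows of pixel values from the same camera, with the pointing changed between frames (or the shutter closed) so that stars do not sit on the same pixels each time. The threshold and function name are hypothetical, and pixels bright in some but not all frames are left unclassified.

```python
def classify_bright_pixels(frames, threshold):
    """Return (hot, transient) sets of (row, col) positions."""
    rows, cols = len(frames[0]), len(frames[0][0])
    hot, transient = set(), set()
    for r in range(rows):
        for c in range(cols):
            bright = sum(1 for frame in frames if frame[r][c] > threshold)
            if bright == len(frames):
                hot.add((r, c))        # bright in every frame: hot pixel
            elif bright == 1:
                transient.add((r, c))  # bright in one frame: likely cosmic ray
    return hot, transient


# Invented 3x3 frames: pixel (1, 1) is always bright; (0, 2) only once.
frames = [
    [[10, 10, 10], [10, 900, 10], [10, 10, 10]],
    [[10, 10, 800], [10, 900, 10], [10, 10, 10]],
    [[10, 10, 10], [10, 900, 10], [10, 10, 10]],
]
print(classify_bright_pixels(frames, threshold=100))
# ({(1, 1)}, {(0, 2)})
```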

Why Do Spacecraft Cameras Use Different Color Filters?

Each image is taken with one or more filters positioned in front of the camera sensor. This is similar to how photographers on Earth attach filters to their cameras to achieve special effects in their photos. Another analogy is the red and blue lenses in 3D glasses, which let only red or blue light reach each eye.

Most digital cameras carried by spacecraft take monochrome images through different color filters, and scientists on Earth can later combine multiple images to produce processed image products, including color images. The filters are specially designed to let only certain wavelengths of light pass through, such as red, green, blue, infrared, or ultraviolet. Some cameras also have polarized light filters.
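Combining filter images into a color image can be sketched by stretching each monochrome frame to the 0 to 255 range and using the red-, green-, and blue-filter frames as the three color channels. This is a simplified sketch: it assumes the three frames are already aligned, stores them as plain lists of rows, and scales each channel independently. That is one common choice for enhanced color, but it changes the color balance; natural color products require calibrated, consistently scaled channels. The function names are hypothetical.

```python
def scale_to_8bit(image):
    """Linearly map an image's darkest..brightest values onto 0..255."""
    lo = min(min(row) for row in image)
    hi = max(max(row) for row in image)
    span = (hi - lo) or 1  # avoid dividing by zero for a uniform image
    return [[round(255 * (v - lo) / span) for v in row] for row in image]


def combine_rgb(red, green, blue):
    """Stack three aligned filter images into rows of (R, G, B) tuples."""
    r, g, b = scale_to_8bit(red), scale_to_8bit(green), scale_to_8bit(blue)
    return [list(zip(r_row, g_row, b_row))
            for r_row, g_row, b_row in zip(r, g, b)]


# Invented 2x2 frames taken through red, green, and blue filters.
red = [[100, 400], [250, 400]]
green = [[100, 300], [200, 300]]
blue = [[100, 200], [150, 200]]
print(combine_rgb(red, green, blue))
```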

Humans see color in visible light. Consumer digital cameras, including those in smartphones, take color images meant to capture scenes much as we would see them with our eyes. But space imaging cameras are specially designed for science and engineering tasks. They use color filters (also called spectral filters) to reveal how a scene looks in different colors of light. Because they show how the Sun, a planet, a moon, an asteroid, or a comet appears in different colors, these filters are a powerful tool in imaging science.

Color filter images can be analyzed individually or compared to learn a wide variety of things. For example, researchers might evaluate how much brighter features on a moon's surface appear in one color than in another, or in the same color but through different polarized light filters. Such comparisons might reveal new things about the texture, age, or composition of those features.
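A simple way to compare brightness in two filters is a band ratio: divide one image by the other, pixel by pixel, and look for features whose ratio departs from the typical value. This sketch assumes the two images are already calibrated and aligned; the filter pairing, the tolerance, the function names, and the pixel values are hypothetical.

```python
import statistics


def band_ratio(image_a, image_b, floor=1e-6):
    """Pixel-by-pixel ratio of two aligned images (floor avoids divide-by-zero)."""
    return [[a / max(b, floor) for a, b in zip(row_a, row_b)]
            for row_a, row_b in zip(image_a, image_b)]


def unusual_pixels(ratio, tolerance=0.1):
    """Pixels whose ratio differs from the image's median ratio by > tolerance."""
    typical = statistics.median(v for row in ratio for v in row)
    return [(y, x) for y, row in enumerate(ratio)
            for x, v in enumerate(row) if abs(v - typical) > tolerance]


# Invented calibrated brightness values through two different filters.
infrared = [[120.0, 118.0], [60.0, 121.0]]
green = [[100.0, 98.0], [100.0, 101.0]]
print(unusual_pixels(band_ratio(infrared, green)))  # [(1, 0)]
```

Taking a ratio rather than a difference cancels out brightness changes that affect both filters equally, such as shading from the local slope toward the Sun, so what remains is mostly the difference in color.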

How Are Images From Space Different Than My Camera's Images?

Images from space missions are a type of scientific or engineering data. At the simplest level, an image is a grid of pixels of varying brightness. The full-quality (archival-quality) images that scientists and engineers use for their work are uncompressed. This means two things:

- The images have full-image resolution, or original pixel scale, as received from the camera on the spacecraft.

- The images have their original, broad range of shades of gray, which is set by their bit depth (the number of bits used to store each pixel's value).

These qualities are important for someone using the original data for technical work.

Archival-quality data also include information similar to the metadata a consumer digital camera might save in its image files, such as the precise time the image was taken, the exposure duration, and whether a flash was used. Science image data, however, carry much richer metadata to aid researchers in their analyses, including the spatial scale of the image; the latitude and longitude on the target world shown in the image; the angle formed by the Sun, the target, and the spacecraft (the phase angle); and other information.
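The Sun-target-spacecraft angle listed among the metadata (usually called the phase angle) is the angle at the target between the direction to the Sun and the direction to the spacecraft; a small phase angle means the spacecraft sees the target nearly fully lit. Here is a minimal sketch of computing it from position vectors, assuming all three positions are given in the same Cartesian frame and units; the function name and example positions are hypothetical.

```python
import math


def phase_angle_deg(sun, target, spacecraft):
    """Angle at the target between the directions to the Sun and spacecraft."""
    to_sun = [s - t for s, t in zip(sun, target)]
    to_sc = [c - t for c, t in zip(spacecraft, target)]
    dot = sum(a * b for a, b in zip(to_sun, to_sc))
    cos_angle = dot / (math.hypot(*to_sun) * math.hypot(*to_sc))
    # Clamp to [-1, 1] to guard against floating-point round-off.
    return math.degrees(math.acos(max(-1.0, min(1.0, cos_angle))))


# Invented positions (same units, Sun at the origin).
sun, target = (0.0, 0.0, 0.0), (100.0, 0.0, 0.0)
print(phase_angle_deg(sun, target, (90.0, 0.0, 0.0)))    # near 0: fully lit
print(phase_angle_deg(sun, target, (100.0, 10.0, 0.0)))  # near 90: half lit
```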

These original, full-quality images are usually in IMG format (their filenames end in .img) and require specialized software to open and work with. A variety of tools can view IMG-format images, and many are listed on the Software, Tools, Tutorials & Viewers page.
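To give a sense of what that specialized software does, here is a minimal sketch of reading an IMG file whose text label is attached at the start of the file, in the style of NASA's PDS3 archive format. It assumes a very simple case: "KEY = VALUE" label lines ending with END, an ^IMAGE pointer given as a 1-based record number, and 16-bit unsigned integers stored most-significant byte first. Real products often differ (detached label files, PDS4 XML labels, other sample types, prefix bytes, multiple bands), so established tools are the right choice for actual work. The function names and file name are hypothetical.

```python
import struct


def parse_label(data):
    """Collect KEY = VALUE pairs from an attached text label, up to END."""
    label = {}
    for raw_line in data.decode("ascii", errors="replace").splitlines():
        line = raw_line.strip()
        if line == "END":
            break
        if "=" in line:
            key, value = (part.strip() for part in line.split("=", 1))
            label.setdefault(key, value.strip('"'))  # keep first occurrence
    return label


def read_img(data):
    """Return the image as a list of rows of integers."""
    label = parse_label(data)
    if (label.get("SAMPLE_BITS") != "16"
            or label.get("SAMPLE_TYPE") != "MSB_UNSIGNED_INTEGER"):
        raise ValueError("this sketch only handles 16-bit MSB unsigned samples")
    record_bytes = int(label["RECORD_BYTES"])
    start = (int(label["^IMAGE"]) - 1) * record_bytes  # pointer is 1-based
    lines, samples = int(label["LINES"]), int(label["LINE_SAMPLES"])
    count = lines * samples
    values = struct.unpack(f">{count}H", data[start:start + 2 * count])
    return [list(values[i * samples:(i + 1) * samples]) for i in range(lines)]


# Usage (the file name is hypothetical):
# with open("example.img", "rb") as f:
#     pixels = read_img(f.read())
```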

What Are the Formats for Raw Images?

NASA generally provides raw images in formats like JPG and PNG, file types most internet users are familiar with. These require no special software to view, and they're easy to work with in common image processing applications. But these files are not as high in quality as the archival versions of the images, because information has been removed to make them smaller. JPGs use lossy compression, which discards some fine detail, while PNG raw images keep fewer shades of gray (typically reduced from 16 or 32 bits per pixel in an IMG file to 8 bits in a PNG file).

So, while some information, or detail, is lost in compressing the images, the benefit is that raw, preview versions of imaging data are available soon after the data reach the ground.
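The loss of gray levels can be made concrete with a little arithmetic. Each extra bit doubles the number of available shades, so reducing 16-bit data to 8 bits merges every run of 256 neighboring values into one. The sketch below assumes the simplest possible conversion, keeping only the top 8 bits; actual raw-image pipelines may stretch the data or apply a lookup table first, and the function name and pixel values are invented.

```python
# 16-bit data can hold 2**16 = 65,536 gray levels; 8-bit data only 2**8 = 256.
print(2 ** 16, 2 ** 8)  # 65536 256


def to_8bit(value_16bit):
    """Keep the top 8 of 16 bits (one simple way to reduce bit depth)."""
    return value_16bit >> 8


# Five slightly different shades in the 16-bit original...
subtle_shading = [30000, 30050, 30100, 30200, 30255]
# ...become only two distinct shades after reduction to 8 bits.
print([to_8bit(v) for v in subtle_shading])  # [117, 117, 117, 117, 118]
```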

In some cases, raw images are mildly contrast-enhanced, compared to their science data counterparts.
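A mild contrast enhancement is often a linear stretch: a chosen range of input values is mapped onto the full 0 to 255 display range, and anything outside that range is clipped. The sketch below picks the range from percentiles of the pixel values. The percentile defaults, the function name, and the rounded-rank percentile lookup are illustrative assumptions, not a description of how NASA produces its raw images.

```python
def linear_stretch(pixels, low_pct=1.0, high_pct=99.0):
    """Map the low..high percentile range of pixels onto 0..255, clipping."""
    ordered = sorted(pixels)

    def percentile(p):
        # Pick the value at the rounded rank; adequate for a sketch.
        return ordered[round(p / 100 * (len(ordered) - 1))]

    lo, hi = percentile(low_pct), percentile(high_pct)
    span = (hi - lo) or 1  # avoid dividing by zero for a uniform image
    return [min(255, max(0, round(255 * (v - lo) / span))) for v in pixels]


# Invented low-contrast data: all values crowd into a narrow band.
print(linear_stretch([50, 60, 70, 80, 90], low_pct=0, high_pct=100))
# [0, 64, 128, 191, 255]
```

Stretching changes only how existing values are displayed; it cannot recover detail that was never recorded.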

Can Raw Images Be Used for Science?

Yes and no. Because some original information is lost during compression, raw images are generally not suitable for detailed scientific analysis, where fine detail and precise pixel measurements matter.

But raw images display a significant amount of detail and provide a useful first look at the image data from a mission, in a way anyone can access. They make it easy to see, at a glance, the abundance of different surface features, and the shapes and structure of features revealed in the images. Trained researchers don't use raw images for detailed scientific measurements, but there's a lot of useful, high-level information in such images that might motivate deeper inquiry.

How Do Unprocessed Images Differ From Processed Images?

Unprocessed, or raw, images are similar to images from your smartphone before you crop them, adjust contrast and color, or apply filters. Processing images (that is, using software to enhance details that are present in the data or to calibrate the data for scientific use) is done both for scientific analysis and to share the images' beauty and excitement.

As with any sort of photography, there's an element of artistry to space image processing. Enhancement of faint details, colors, contrast, and other qualities is standard, but it's done to share what's in the image, not to add something that's not there.

Beyond scientific analysis of the images' content, processed image data have many uses, including:

- Natural color and enhanced color image products

- Maps and panoramas

- Mapping of shape and topography

- 3D images (such as stereo anaglyphs)

- Animated/time-lapse movie sequences

- Both raw and processed images can provide context for science data collected by other instruments. This is important, because much of the most meaningful discovery by space missions happens as a result of multiple instruments and their teams working together to understand what their overlapping investigations are revealing.
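Of the products listed, the stereo anaglyph is easy to sketch: for red-cyan glasses, the left-eye image fills the red channel and the right-eye image fills the green and blue (cyan) channels, so each eye sees only its own view. This minimal sketch assumes two aligned 8-bit grayscale images of the same size, taken from slightly different viewpoints and stored as lists of rows; the function name is hypothetical, and real products also correct for camera geometry.

```python
def make_anaglyph(left, right):
    """Red channel from the left-eye image; green and blue from the right."""
    return [[(left_px, right_px, right_px)
             for left_px, right_px in zip(left_row, right_row)]
            for left_row, right_row in zip(left, right)]


# Invented 1x3 grayscale views from two slightly offset cameras.
print(make_anaglyph([[10, 200, 30]], [[10, 30, 200]]))
# [[(10, 10, 10), (200, 30, 30), (30, 200, 200)]]
```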

Are NASA's Raw Images Copyrighted?

Raw images from NASA are not copyrighted. They are made available per the agency's media usage guidelines.

Processed image products made using raw images from this or other NASA websites may be copyrighted by creators, subject to U.S. and international law, but no claim of ownership may supersede the public's right to use the original images themselves. For example, two different creators may independently produce similar-looking color image products using the same raw images.

How Do NASA's Processed Images Differ From Images Produced by Citizen Scientists?

Interested members of the public process the same images as professionals on NASA missions and create unique versions of these views. NASA enthusiastically supports this form of engagement.

Imaging scientists on NASA missions have access to original, uncompressed, science-quality images as soon as they arrive from the spacecraft, so they're able to make the highest-quality processed image products more quickly. Citizen scientists have access only to the raw, compressed images until the archival-quality data are published in NASA's Planetary Data System, generally six months to a year after the data are downlinked to Earth.

Image products made using raw images can be of extremely high quality, and it's not uncommon for citizen scientists and space image enthusiasts to process and share their image products faster than scientists do. Scientists use specialized software to calibrate science images, removing artifacts and ensuring that the brightness of features is accurately represented. Once the archival data are published, citizen scientists can perform the same calibration, producing images of potentially equal quality and accuracy.
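Two of the most basic calibration steps are subtracting a dark frame (the signal the sensor records with no light, which also captures hot pixels) and dividing by a flat field (each pixel's relative sensitivity, normalized so a typical pixel is 1.0). This is a minimal sketch with a hypothetical function name and invented values; mission pipelines include further steps, such as bias, shutter, and absolute brightness corrections, that are not shown here.

```python
def calibrate(raw, dark, flat):
    """(raw - dark) / flat, pixel by pixel, for aligned lists of rows."""
    return [[(r - d) / f for r, d, f in zip(raw_row, dark_row, flat_row)]
            for raw_row, dark_row, flat_row in zip(raw, dark, flat)]


# Invented values: both pixels view a surface of the same brightness, but the
# second pixel records extra dark signal and is twice as sensitive.
raw = [[110, 260]]
dark = [[10, 60]]
flat = [[1.0, 2.0]]
print(calibrate(raw, dark, flat))  # [[100.0, 100.0]]
```

After calibration the two pixels agree, which is what "brightness of features is accurately represented" means in practice.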